A computer system that recognizes a user’s emotional state

November 22, 2011

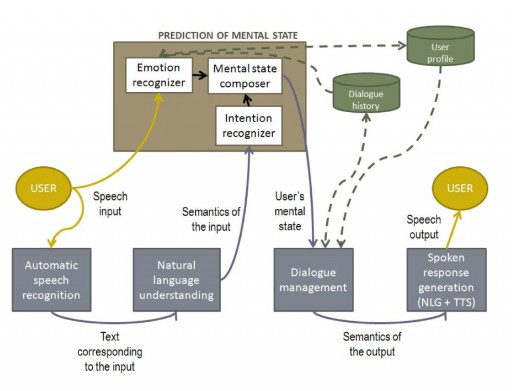

Integration of mental-state prediction in the architecture of a spoken dialogue system (credit: Universidad Carlos III de Madrid and the Universidad de Granada)

Scientists at the Universidad Carlos III de Madrid (UC3M) and the Universidad de Granada (UGR) have developed an affective computing system that allows a machine to recognize the emotional state of a person who is speaking to it.

The system can be used to adapt the dialogue to the user’s situation, so that the machine’s response is appropriate to the person’s emotional state. To detect anger, boredom, and doubt, it measures 60 different acoustic parameters, such as tone of voice, speech rate, duration of pauses, and loudness.
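To illustrate what "acoustic parameters" can mean in practice, here is a minimal sketch that extracts four prosodic cues from a mono waveform: relative loudness, the share of silent frames, average pause length, and a crude autocorrelation pitch estimate. It is not the researchers' 60-parameter feature set. The function names, frame sizes, the −35 dB silence threshold, and the 75–400 Hz pitch range are all illustrative assumptions.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames (assumes len(x) >= frame_len)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop : i * hop + frame_len] for i in range(n_frames)])

def frame_pitch(frame, sr, fmin=75.0, fmax=400.0):
    """Crude F0 estimate: the autocorrelation peak within a plausible speech lag range."""
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]  # lags 0..N-1
    lag_min = int(sr / fmax)
    lag_max = min(int(sr / fmin), len(ac) - 1)
    lag = lag_min + int(np.argmax(ac[lag_min : lag_max + 1]))
    return sr / lag

def prosodic_features(x, sr, frame_ms=25.0, hop_ms=10.0, silence_db=-35.0):
    """Hypothetical prosodic feature extractor (loudness, pauses, pitch)."""
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    frames = frame_signal(x, frame_len, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1)) + 1e-12
    db = 20 * np.log10(rms / rms.max())       # loudness relative to the loudest frame
    silent = db < silence_db                   # assumed silence threshold

    # Pauses are runs of consecutive silent frames.
    pauses, run = [], 0
    for s in silent:
        if s:
            run += 1
        elif run:
            pauses.append(run)
            run = 0
    if run:
        pauses.append(run)

    pitches = [frame_pitch(f, sr) for f in frames[~silent]]
    return {
        "mean_db": float(db[~silent].mean()) if pitches else float("nan"),
        "pause_ratio": float(silent.mean()),
        "mean_pause_s": float(np.mean(pauses) * hop / sr) if pauses else 0.0,
        "median_f0_hz": float(np.median(pitches)) if pitches else float("nan"),
    }
```

In a real recognizer, vectors like this (with many more features) would be fed to a trained classifier that outputs a probability for each emotional state.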

Information about how the dialogue has developed is also used to adjust the probability that the user is in one emotional state or another. For example, if the system repeatedly failed to recognize what the user wanted to say, or asked the user to repeat information he or she had already given, these factors could make the user angry or bored. The researchers also developed a statistical method that uses earlier dialogues to learn which action the user is most likely to take at any given moment.
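The two ideas in this paragraph can be sketched as follows, under loudly stated assumptions. First, acoustic emotion probabilities are rescaled by dialogue-history evidence (recognition errors and requests to repeat information) and renormalized. Second, a simple frequency model, learned from earlier dialogues, predicts the user's next action given the system's last action. The emotion labels, boost weights, and action names are hypothetical, and the counting model is a stand-in, not the statistical method described in the paper.

```python
from collections import Counter, defaultdict

def adjust_with_dialogue_history(acoustic_probs, n_recognition_errors, n_repeat_requests):
    """Rescale acoustic emotion probabilities using dialogue-history evidence."""
    # Hypothetical weights: each recognition error or repeated request makes
    # anger and boredom more likely; other states keep a factor of 1.0.
    boost = {
        "angry": 1.0 + 0.3 * n_recognition_errors + 0.2 * n_repeat_requests,
        "bored": 1.0 + 0.1 * n_recognition_errors + 0.3 * n_repeat_requests,
    }
    scores = {e: p * boost.get(e, 1.0) for e, p in acoustic_probs.items()}
    total = sum(scores.values())
    return {e: s / total for e, s in scores.items()}  # renormalize to sum to 1

def train_intention_model(dialogues):
    """Count which user action followed each system action in earlier dialogues."""
    counts = defaultdict(Counter)
    for dialogue in dialogues:
        for system_act, user_act in dialogue:
            counts[system_act][user_act] += 1
    return counts

def predict_user_action(model, system_act):
    """Return the most frequent user action after system_act and its relative frequency."""
    c = model.get(system_act)
    if not c:
        return None, 0.0
    action, n = c.most_common(1)[0]
    return action, n / sum(c.values())
```

For example, after three misrecognitions the sketch shifts probability mass toward "angry", and a model trained on past dialogues can report that users most often answer a date question by giving a date.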

Based on the detected emotion and intention, the system can automatically adapt the dialogue to the situation the user is experiencing. For example, if the user has doubts, the system can offer more detailed help, but if the user is bored, such an offer could be counterproductive.
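The adaptation step described above can be pictured as a small decision rule. This minimal sketch picks a response strategy from emotion probabilities and an optional predicted user action. The strategy names, the 0.4 confidence threshold, and the `"ask_help"` action are hypothetical and are not taken from the paper.

```python
def choose_strategy(emotion_probs, predicted_action=None, threshold=0.4):
    """Pick a hypothetical response strategy from detected emotion and intention."""
    emotion = max(emotion_probs, key=emotion_probs.get)
    if emotion_probs[emotion] < threshold:
        emotion = "neutral"            # not confident enough to adapt the dialogue
    if emotion == "bored":
        return "short_prompt"          # offering extra help would likely backfire
    if emotion == "doubtful" or predicted_action == "ask_help":
        return "detailed_help"         # doubt (or a likely help request): explain more
    if emotion == "angry":
        return "apologize_and_confirm"
    return "standard_prompt"
```

Checking boredom first encodes the article's example: even if the user is predicted to ask for help, a bored user gets a shorter prompt rather than a longer explanation.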

Ref.: Z. Callejas, D. Griol, R. López-Cózar, Predicting user mental states in spoken dialogue systems, EURASIP Journal on Advances in Signal Processing, 2011: 6, pp. 1-23 [DOI:10.1186/1687-6180-2011-6] (open access)