A low-cost sonification system to assist the blind

January 15, 2014

(Credit: UC3M)

An improved assistive technology system for the blind that uses sonification (visualization using sounds) has been developed by Universidad Carlos III de Madrid (UC3M) researchers, with the goal of replacing costly, bulky current systems.

How it works

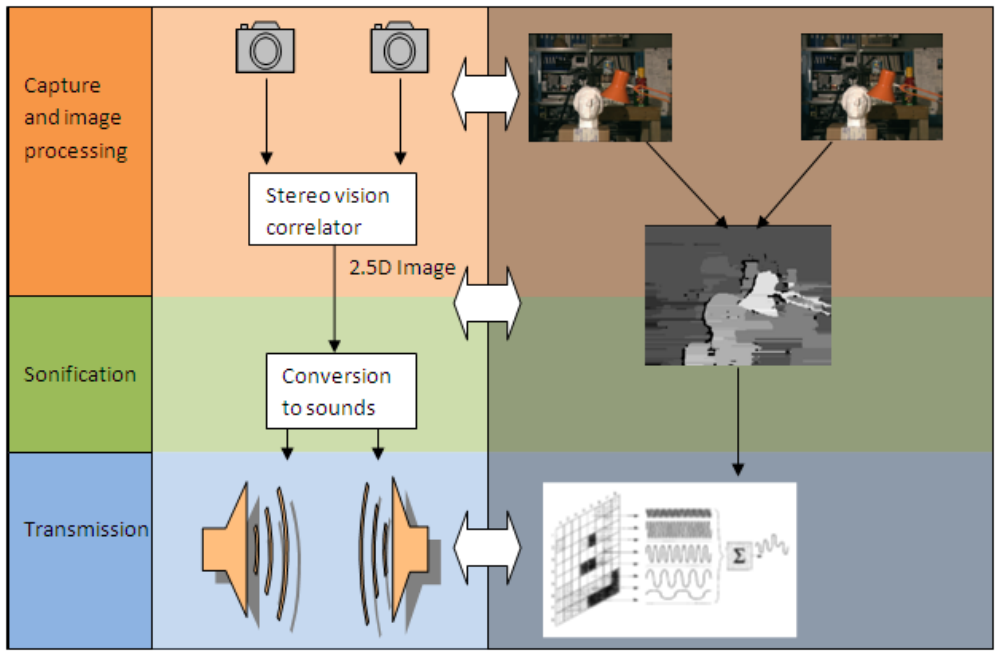

Called Assistive Technology for Autonomous Displacement (ATAD), the system includes a stereo vision processor that measures the difference between images captured by two cameras placed slightly apart (for image depth data) and calculates the distance to each point in the scene.
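As a rough illustration of how a distance can be recovered from two offset cameras, the sketch below applies the standard rectified-stereo relation (depth = focal length × camera separation / disparity). The article does not say which algorithm ATAD uses, so the function name and the camera parameters here are assumptions for illustration only.

```python
def disparity_to_depth(disparities_px, focal_length_px, baseline_m):
    """Convert per-pixel disparities (in pixels) to depths (in metres).

    Uses the textbook relation for a rectified stereo pair:
        depth = focal_length * baseline / disparity
    A disparity of zero or less means no match was found (or the point
    is effectively at infinity), so None is returned for that pixel.
    """
    depths = []
    for d in disparities_px:
        if d > 0:
            depths.append(focal_length_px * baseline_m / d)
        else:
            depths.append(None)
    return depths


# Hypothetical rig (not from the article): 800 px focal length,
# cameras mounted 0.125 m apart.
print(disparity_to_depth([50, 25, 0], focal_length_px=800, baseline_m=0.125))
```

Nearby objects shift more between the two images than distant ones, so a larger disparity maps to a shorter distance.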

Then it transmits the information to the user by means of a sound code that gives information regarding the position and distance to the different obstacles, using a small audio stereo amplifier and bone-conduction headphones.

Assistive Technology for Autonomous Displacement (ATAD) block diagram (credit: P. Revuelta Sanz et al.)

“To represent height, the synthesizer emits up to eight different tones,” said co-developer Pablo Revuelta Sanz, who described the system in a doctoral thesis. “In addition, the sounds are laterally located, so that something on the left sounds louder on that side, and vice versa.

“Six profiles, ranging from one that is very simple, with a sound alarm that only works when one is going to crash into an obstacle, to others that describe the scene with 64 simultaneous sounds, can be chosen.”
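The mapping described in the quote can be sketched in a few lines. This is only a guess at the general idea, not the ATAD sound code: the 8 × 8 grid (which would account for 64 simultaneous sounds), the particular tone frequencies, the linear left/right panning, and the rule that closer obstacles sound louder are all assumptions made for the example.

```python
# Assumed tone set: eight notes of a C major scale, lowest to highest (Hz).
TONES_HZ = [261.63, 293.66, 329.63, 349.23, 392.00, 440.00, 493.88, 523.25]
GRID_ROWS = 8   # assumed vertical resolution (one tone per row)
GRID_COLS = 8   # assumed horizontal resolution


def sonify_cell(row, col, distance_m, max_range_m=5.0):
    """Map one grid cell to (frequency_hz, left_gain, right_gain).

    row 0 is the top of the scene and col 0 is the far left.
    Height selects the tone, horizontal position sets the stereo
    balance, and distance sets the overall loudness (closer = louder).
    """
    # Height -> pitch: higher in the scene gives a higher tone.
    frequency_hz = TONES_HZ[GRID_ROWS - 1 - row]

    # Distance -> loudness: silent at or beyond max_range_m.
    loudness = max(0.0, 1.0 - distance_m / max_range_m)

    # Horizontal position -> balance: 0.0 is fully left, 1.0 fully right.
    pan = col / (GRID_COLS - 1)
    left_gain = loudness * (1.0 - pan)
    right_gain = loudness * pan
    return frequency_hz, left_gain, right_gain


# An obstacle high up on the far left, 2.5 m away.
print(sonify_cell(row=0, col=0, distance_m=2.5))
```

A simpler profile, such as the collision alarm mentioned in the quote, could be approximated under the same assumptions by sounding a single tone only when the nearest distance falls below a threshold.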

The prototype system was tested on 28 individuals, including sighted individuals, persons with limited vision, and blind persons. The final system was tested on eight blind persons in real environments.

According to Revuelta, “the aim of the system is to complement a cane or a guide dog, and not in any way replace them.” The estimated price of 250 euros is “very economical compared with other systems that are currently on the market.”

However, the project currently has no funding, he told KurzweilAI. “We expect to find some financial support to finish it and offer a commercial version.”

Abstract of Thesis

The project developed in this thesis involves the design, implementation and evaluation of a new technical assistance aiming to ease the mobility of people with visual impairments. By using processing and sounds synthesis, the users can hear the sonification protocol (through bone conduction) informing them, after training, about the position and distance of the various obstacles that may be on their way, avoiding eventual accidents. In this project, surveys were conducted with experts in the field of rehabilitation, blindness and techniques of image processing and sound, which defined the user requirements that served as guideline for the design. The thesis consists of three self-contained blocks: (i) image processing, where 4 processing algorithms are proposed for stereo vision, (ii) sonification, which details the proposed sound transformation of visual information, and (iii) a final central chapter on integrating the above and sequentially evaluated in two versions or implementation modes (software and hardware). Both versions have been tested with both sighted and blind participants, obtaining qualitative and quantitative results, which define future improvements to the project.