Disney, CMU researchers build face models that give animators intuitive control of expressions

August 9, 2011

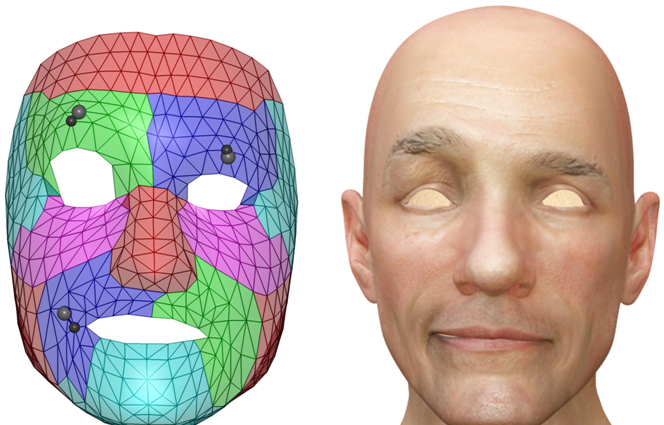

Left: region-based model (black markers show adjustments made by an animator to create a smirk and a wink). Right: result (credit: CMU)

Scientists at Disney Research, Pittsburgh, and Carnegie Mellon University’s (CMU’s) Robotics Institute have created computerized models derived from actors’ faces that reflect a full range of natural expressions while also giving animators the ability to manipulate facial poses.

The researchers developed a method that translates the motions of actors into a three-dimensional face model, and sub-divides it into facial regions that enable animators to intuitively create the poses they need. They recorded facial motion-capture data from a professional actor as he performed sentences with emotional content, localized actions and random motions. To cover the whole face, 320 markers were applied to enable the camera to capture facial motions during the performances.

The data from the actor was then analyzed using a mathematical method that divided the face into regions, based in part on distances between points and in part on correlations between points that tend to move in concert with each other. These regional sub-models are independently trained, but share boundaries.
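The article does not spell out the mathematical method, so the following is only a minimal sketch of the general idea, not the researchers' algorithm. It assumes a Gaussian falloff for spatial closeness, a plain correlation of marker displacements for "moving in concert," an equal weighting of the two, and a simple threshold-and-merge grouping. The function names `marker_affinity` and `group_markers`, and the parameters `distance_scale`, `weight` and `threshold`, are all hypothetical.

```python
import numpy as np


def marker_affinity(rest_positions, trajectories, distance_scale=1.0, weight=0.5):
    """Blend spatial closeness and motion correlation into one score per marker pair.

    rest_positions: array of shape (n_markers, 3), neutral-pose marker positions.
    trajectories:   array of shape (n_frames, n_markers, 3), captured positions.
    distance_scale and weight are illustrative assumptions, not published values.
    """
    n_markers = rest_positions.shape[0]

    # Spatial term: close to 1 for neighbouring markers, near 0 for distant ones.
    diffs = rest_positions[:, None, :] - rest_positions[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    proximity = np.exp(-((dists / distance_scale) ** 2))

    # Motion term: correlate each marker's displacement signal over time.
    centered = trajectories - trajectories.mean(axis=0, keepdims=True)
    signals = centered.transpose(1, 0, 2).reshape(n_markers, -1)
    with np.errstate(invalid="ignore", divide="ignore"):
        corr = np.nan_to_num(np.corrcoef(signals))  # motionless markers -> 0
    together = np.clip(corr, 0.0, 1.0)  # ignore markers that move in opposition

    return weight * proximity + (1.0 - weight) * together


def group_markers(affinity, threshold=0.6):
    """Merge markers whose pairwise affinity exceeds the threshold (union-find)."""
    n = affinity.shape[0]
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if affinity[i, j] > threshold:
                root_i, root_j = find(i), find(j)
                if root_i != root_j:
                    parent[root_j] = root_i

    # Relabel the groups 0, 1, 2, ... in order of first appearance.
    labels = {}
    regions = []
    for i in range(n):
        root = find(i)
        if root not in labels:
            labels[root] = len(labels)
        regions.append(labels[root])
    return regions
```

Calling `group_markers(marker_affinity(rest_positions, trajectories))` returns one region label per marker. How many regions come out depends entirely on the assumed threshold and weighting, so this sketch should not be expected to reproduce the model described here.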

The result was a model with 13 distinct regions. The researchers said that more regions would be possible by using performance capture techniques that can provide a dense reconstruction of the face, rather than the sparse samples produced by traditional motion capture equipment.

Their work will be presented Aug. 10 at SIGGRAPH 2011, the International Conference on Computer Graphics and Interactive Techniques, in Vancouver.
