How to supervise a robot with your mind and hand gestures

June 22, 2018

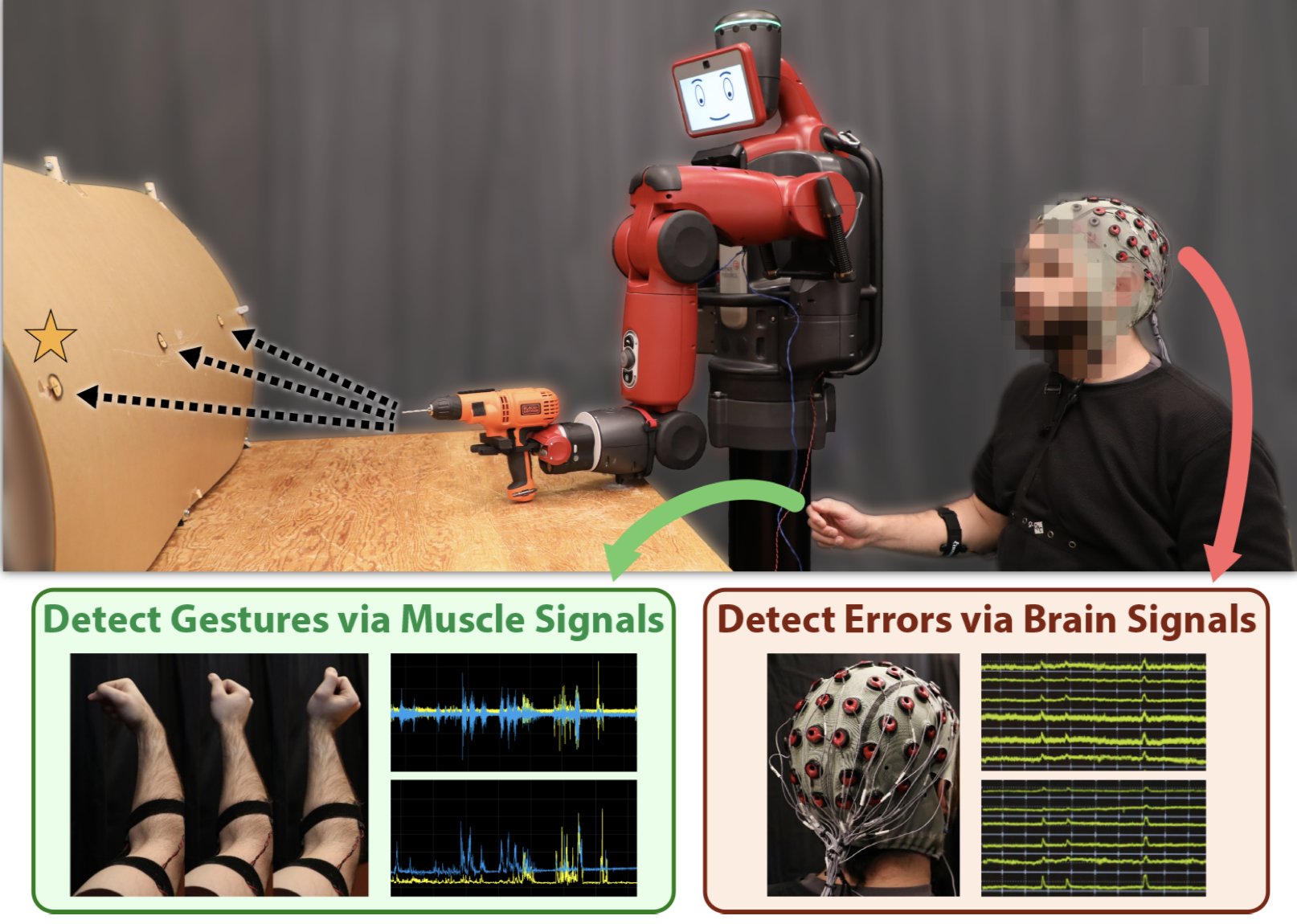

A user supervises an autonomous robot, using brain signals to detect its mistakes and muscle signals to redirect it, in a task in which the robot moves a power drill to one of three possible targets on the body of a mock airplane. (credit: MIT)

Getting robots to do things isn’t easy. Usually, scientists have to either explicitly program them, or else train them to understand human language. Both options are a lot of work.

Now a new system developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Vienna University of Technology, and Boston University takes a simpler approach: It uses a human’s brainwaves and hand gestures to instantly correct robot mistakes.

Plug and play

Instead of trying to mentally guide the robot (which would require a complex, error-prone system and extensive operator training), the system identifies robot errors in real time by detecting a specific type of electroencephalogram (EEG) signal called “error-related potentials,” using a brain-computer interface (BCI) cap. These potentials (voltage spikes) are unconsciously produced in the brain when people notice mistakes — no user training required.

If an error-related potential is detected, the robot automatically stops. The supervisor can then correct it by simply flicking a wrist, which generates an electromyogram (EMG) signal that is detected by a muscle sensor on the supervisor’s arm and used to give the robot specific instructions.*

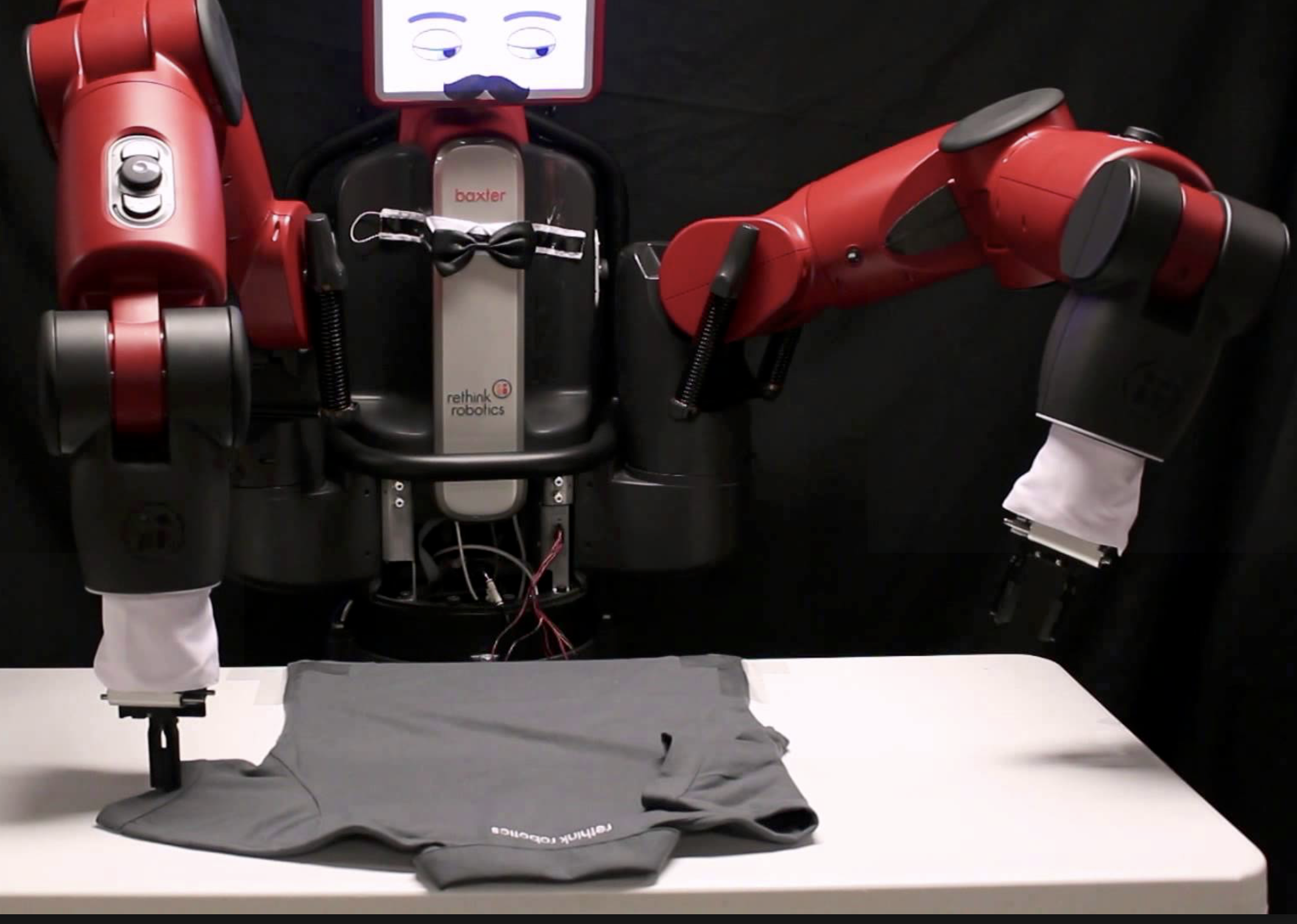

To develop the system, the researchers used “Baxter,” a popular humanoid robot from Rethink Robotics, shown here folding a shirt. (credit: Rethink Robotics)

Remarkably, the “plug and play” system works without requiring supervisors to be trained, so organizations could readily deploy it in manufacturing and other real-world settings. Supervisors can even manage teams of robots.**

For the project, the team used “Baxter,” a humanoid robot from Rethink Robotics. With human supervision, the robot went from choosing the correct target 70 percent of the time to more than 97 percent of the time in a multi-target selection task for a mock drilling operation.

“This work combining EEG and EMG feedback enables natural human-robot interactions for a broader set of applications than we’ve been able to do before using only EEG feedback,” says CSAIL Director Daniela Rus, who supervised the work. “By including muscle feedback, we can use gestures to command the robot spatially, with much more nuance and specificity.”

“A more natural and intuitive extension of us”

The team says that they could imagine the system one day being useful for the elderly, or workers with language disorders or limited mobility.

“We’d like to move away from a world where people have to adapt to the constraints of machines,” says Rus. “Approaches like this show that it’s very much possible to develop robotic systems that are a more natural and intuitive extension of us.”

Hmm … so could this system help Tesla speed up its lagging Model 3 production?

A paper describing the system will be presented at the Robotics: Science and Systems (RSS) conference in Pittsburgh, Pennsylvania next week.

Ref.: Robotics: Science and Systems Proceedings (forthcoming). Source: MIT.

* EEG and EMG each have shortcomings: EEG signals are not always reliably detectable, while EMG signals can sometimes be difficult to map to motions that are any more specific than “move left or right.” Merging the two, however, allows for more robust bio-sensing and makes it possible for the system to work on new users without training.

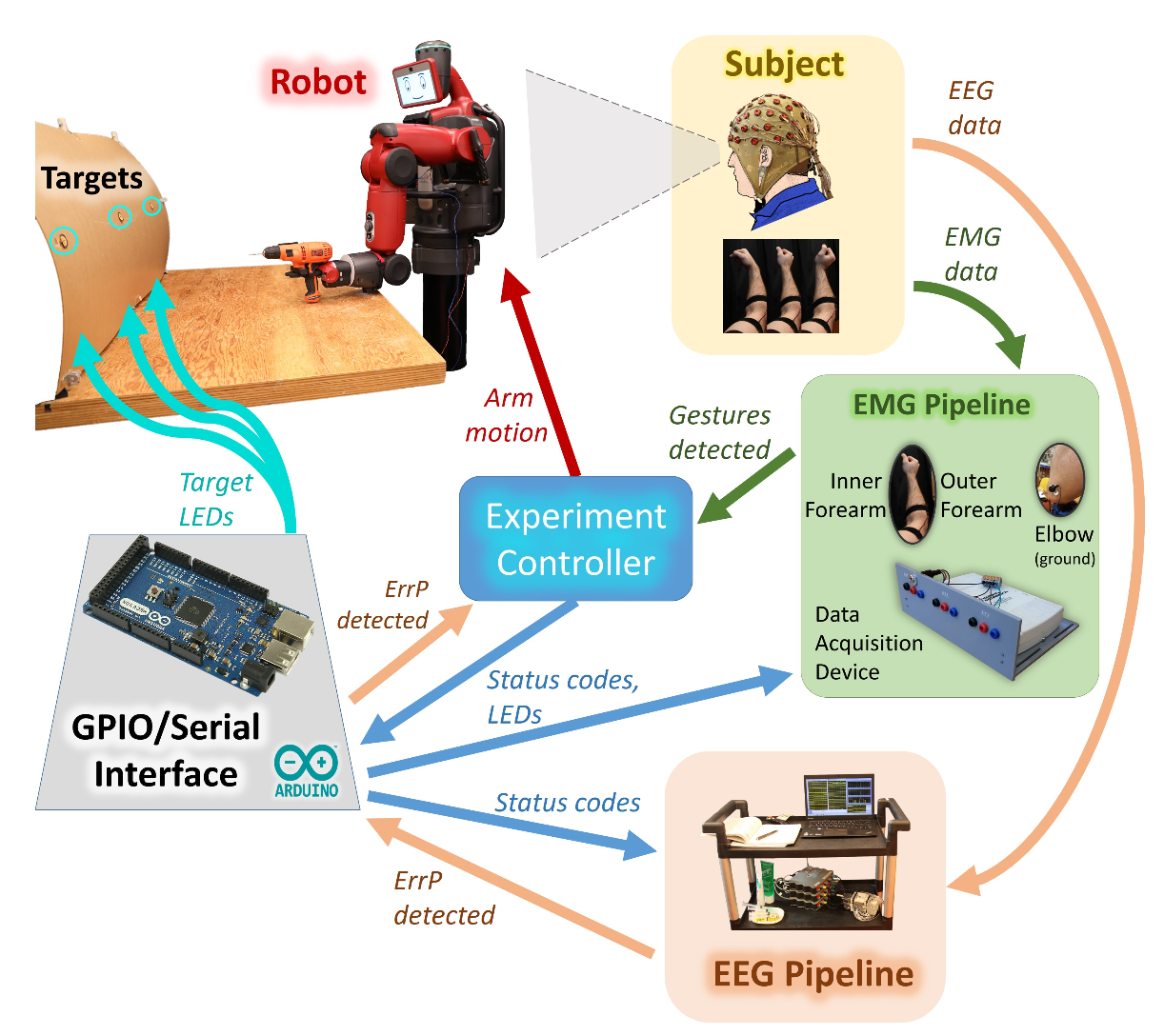

** The “plug and play” supervisory control system

“If an [error-related potential] or a gesture is detected, the robot halts and requests assistance. The human then gestures to the left or right to naturally scroll through possible targets. Once the correct target is selected, the robot resumes autonomous operation. … The system includes an experiment controller and the Baxter robot as well as EMG and EEG data acquisition and classification systems. A mechanical contact switch on the robot’s arm detects initiation of robot arm motion. A human supervisor closes the loop.” — Joseph DelPreto et al. Plug-and-Play Supervisory Control Using Muscle and Brain Signals for Real-Time Gesture and Error Detection. Robotics: Science and Systems Proceedings (forthcoming). (credit: MIT)
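
For readers who think in code, the loop described in that quote can be sketched as a small state machine. This is only an illustrative sketch in Python, not the researchers' implementation: the names `Event`, `SupervisedRobot`, `step`, and `confirm` are hypothetical, the way a target is confirmed is an assumption (the quote does not say how selection ends), and so is the choice to stop scrolling at the first and last target rather than wrap around.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Event(Enum):
    """Classifier outputs (hypothetical names, not from the paper)."""
    NONE = auto()           # nothing detected
    ERRP = auto()           # EEG: error-related potential
    GESTURE_LEFT = auto()   # EMG: gesture to the left
    GESTURE_RIGHT = auto()  # EMG: gesture to the right


@dataclass
class SupervisedRobot:
    num_targets: int      # e.g. 3 drill targets on the mock airplane
    target: int           # index of the robot's current choice
    halted: bool = False  # True while the robot waits for the human

    def step(self, event: Event) -> None:
        if event is Event.NONE:
            return
        if not self.halted:
            # An ErrP or a gesture interrupts autonomous operation.
            self.halted = True
            return
        # While halted, left/right gestures scroll through the targets.
        # Assumption: scrolling clamps at the ends instead of wrapping.
        if event is Event.GESTURE_LEFT:
            self.target = max(self.target - 1, 0)
        elif event is Event.GESTURE_RIGHT:
            self.target = min(self.target + 1, self.num_targets - 1)

    def confirm(self) -> int:
        # Assumption: some confirmation step ends the correction;
        # the robot then resumes autonomous operation.
        self.halted = False
        return self.target


robot = SupervisedRobot(num_targets=3, target=0)
robot.step(Event.ERRP)           # supervisor's brain flags a mistake
robot.step(Event.GESTURE_RIGHT)  # scroll to the next target
chosen = robot.confirm()         # robot resumes with the new target
```

In this sketch the brain signal only says that something is wrong, while the gestures say what the correct target is, which mirrors the division of labor between EEG and EMG described in the article.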